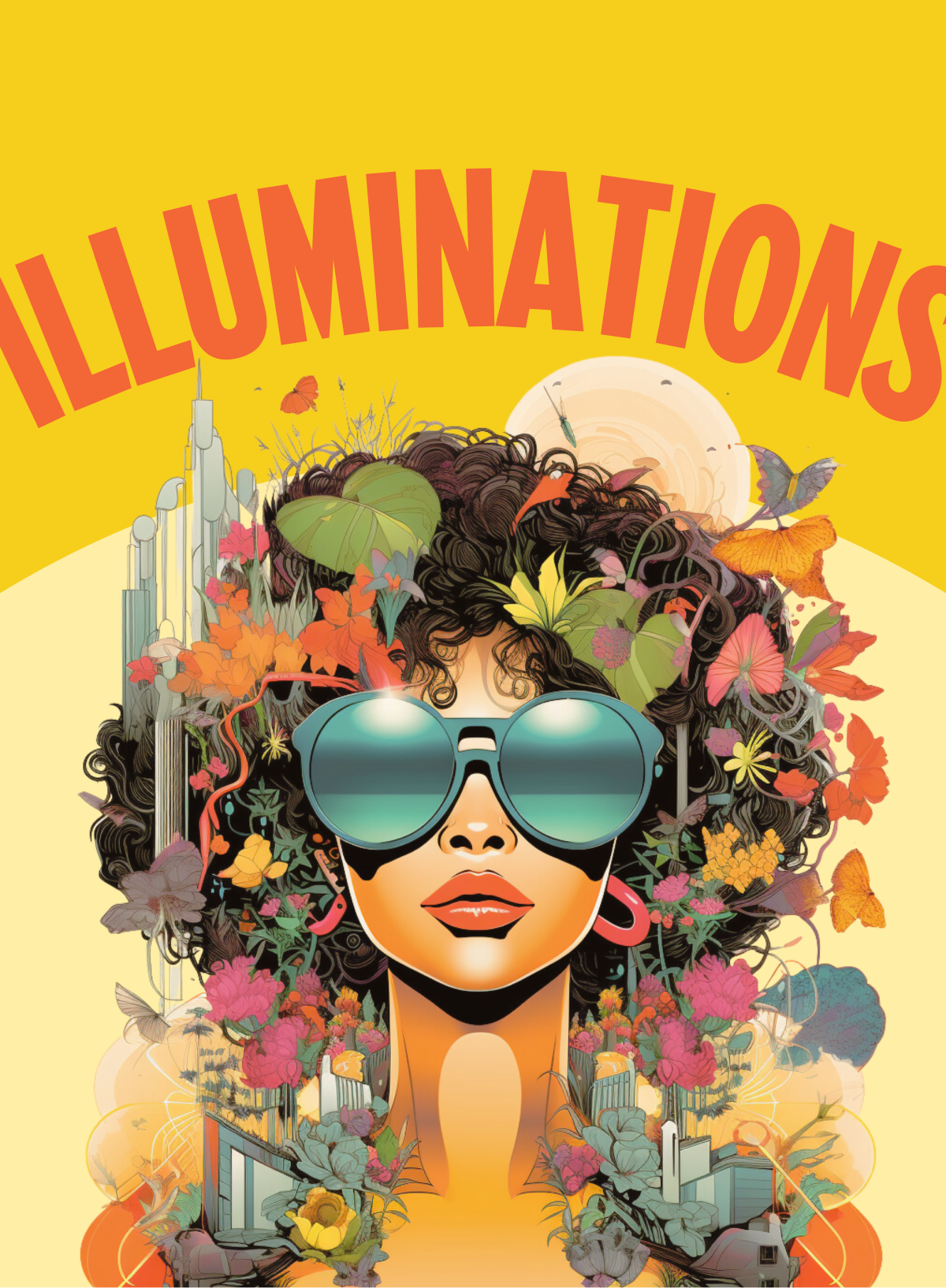

BROUGHT TO YOU BY: INTELLIGENT LIFE

LEAD ARTIST:

ARTIFICIAL INTELLIGENCE

AI conversations happening now could shape generations – and we are living at the center of them.

Wheels. Crops. Gunpowder. Printing press. Lightbulbs. The internet. And today, artificial intelligence.

Each chapter of human history is marked by world-shaping technologies. While each was developed to solve existing problems, each also created new ones. The invention of the lightbulb displaced workers in the gas industry and led to fears about the harms of electric illumination. The printing press made knowledge more accessible while also igniting debates over censorship, culture, and misinformation. Agriculture made communities more resilient to famine while also contributing to environmental hazards and the more concentrated spread of disease.

For millennia, humans have pursued invention to improve their lives. But new technologies don’t necessarily make us happier, healthier, or safer, and they rarely affect all communities equally. Where they help and where they harm is often determined by the priorities of the people who create them, and the systems built to support them.

Artificial intelligence has the potential to touch every major pillar in society, from personalized medicine to synthetic relationships to transportation. Advocates share visions of efficiency and abundance, while critics point to existing flaws that codify bias or risk catastrophe.

San Francisco is at the center of these conversations, which uniquely positions all of us to have a voice. To guide you, our team gathered key vocabulary, dilemmas, opportunities, and cautions for you to explore. The choices we make, the experiments we try, and the values we prioritize could shape how future generations discuss the impacts of this chapter. No one knows all of the right answers, but it’s our collective responsibility to ask the right questions.

QUICK VIEW

DILEMMA

Throughout history, many societies have been fascinated by the quest for universal knowledge. Some of today's AI researchers dream of creating Artificial General Intelligence (AGI), a system with broad knowledge across many domains. Current AI models are much narrower: they are trained to complete specific tasks that, even if complex, don't replicate human intelligence.

While the potential for a collaborative human-AI partnership is exciting, there's also apprehension.

Many experts doubt that AGI is possible, but those who believe it is are concerned about what happens if it becomes uncontrollable or develops intentions misaligned with ours. As we edge closer to this potential future, how do we ensure we're prepared for all possible outcomes?

Challenges and opportunities.

AI is complicated. It has the potential to transform our systems and solve many problems, and/or to amplify our biases and redistribute our challenges in high-tech form.

The impacts span industries. Choose one you care about, like jobs, art, healthcare, education, or transportation, and do a little research into the potential benefits and risks of AI in that space. Keep an open mind and try to build a strong case that accounts for the nuances. What needs to be done to pursue the benefits while protecting against the risks? And who, in particular, would be most harmed by the risks?

BRING HOME THE EXHIBIT

SIGNATURE SCENT

Cardamom, Orange Peel, Sea Salt, Palm

We partnered with Casita Michi to create custom, limited edition candles inspired by the themes of each exhibit.

SIGNATURE COCKTAIL

Cazadores Blanco, Watermelon, Red Bull, Lime

The baristas at The Foundry created custom cocktails (and mocktails) inspired by the themes of each exhibit. Incorporate their recipes into your home gatherings to get a taste of the exhibit.

MEDIA RESOURCES

What to check out next.

WORD BANK

“As a society, we do not have to let AI be used in harmful ways. We could instead craft our society to use AI only for jobs that people don’t want to do. We could have it help us with the tedious and boring things, which could allow us to all have more time and for more resources to be dedicated to being creative and doing things that we enjoy.

That, of course, will require some shifting priorities and values in our society as a whole (especially at the top), but it is possible.”

– Gracie Ermi

MACHINE LEARNING SCIENTIST

“Intelligent Life” contributor

SCIENCE SPOTLIGHT

Would you trust a chatbot to provide an accurate medical diagnosis?

AI has already helped analyze medical images, detect drug interactions, and identify high-risk patients. But many people are unsure about the next potential use: a diagnostic chatbot.

A qualitative study on the emergence of trust in diagnostic chatbots found that people see benefits in interacting with both their physician and AI technology [1]. The difference was that trust in a chatbot was something patients actively chose, while trust in their physician was affect-based. The researchers also found evidence that a chatbot’s communication competencies matter more than its empathetic reactions. We seem to understand that AI can be useful in interpreting and disseminating complex medical information, but its inability to “feel” human emotions may make it hard to trust.

Since that study, AI chatbots have gotten a major upgrade. In March 2023, GPT-4-based chatbots entered the scene, and early evaluations suggest they can provide more accurate diagnoses, save both patients’ and doctors’ time, and give more detailed explanations of a given disease or illness [2].

While GPT-4 was not trained specifically for health care or medical applications, it was trained on a tremendous amount of openly available data from the Internet, including medical texts, research papers, health system websites, and openly available health information podcasts and videos [2]. GPT-4 has been successful in taking medical notes, providing follow-up suggestions to physicians, and catching its own “hallucinations” or mistakes. When prompted to fix its mistakes, it can correct them within a conversation, though that doesn’t guarantee it will avoid them in the future. It also encodes substantial medical knowledge: it answers US Medical Licensing Exam questions correctly more than 90% of the time! This knowledge could be used in consultation, diagnosis, education, and far more. It’s not ready for prime time yet in many cases, and we would definitely recommend sticking with your doctor for now, but how do we prepare for the future of AI in healthcare?

Some of the underlying hazards of, and mistrust in, AI in healthcare center on demographic bias. Currently, race, sexuality, gender, and income status influence, both consciously and subconsciously, the way healthcare providers treat patients. AI has the potential to exacerbate those inequities or to resolve them, depending on how it is trained.

A 2019 study showed how a biased algorithm assigned the same risk scores to Black patients who were considerably sicker than White patients. The algorithm used patients’ past health care spending as a proxy for health needs, but because less money has historically been spent on Black patients with the same level of need, spending reflected unequal access to care rather than how sick patients actually were [3]. However, AI can also be used to support healthcare equity. Another study found that an algorithm trained to predict patients’ own reported knee pain from X-rays reduced unexplained racial disparities in pain, accounting for 4.7 times more of the disparity than the standard severity measures graded by human radiologists [4].

So, what’s next? Current capabilities of AI in healthcare are just the tip of the iceberg, and new challenges will come with each advancement. Physicians can start to increase their patients’ trust in diagnostic chatbots by helping to improve the accuracy of the chatbots’ outputs, nudging patients to use them, and providing a listening ear alongside the chatbot’s diagnosis. At the same time, both healthcare providers and researchers in the field should take care to prevent misdiagnoses caused by their own biases being imprinted onto algorithms.

[1] Seitz, Lennart, Sigrid Bekmeier-Feuerhahn, and Krutika Gohil. "Can we trust a chatbot like a physician? A qualitative study on understanding the emergence of trust toward diagnostic chatbots." International Journal of Human-Computer Studies 165 (2022): 102848.

[2] Lee, Peter, Sebastien Bubeck, and Joseph Petro. "Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine." New England Journal of Medicine 388.13 (2023): 1233-1239.

[3] Obermeyer, Ziad, et al. "Dissecting racial bias in an algorithm used to manage the health of populations." Science 366.6464 (2019): 447-453.

[4] Pierson, Emma, et al. "An algorithmic approach to reducing unexplained pain disparities in underserved populations." Nature Medicine 27.1 (2021): 136-140.

FIELD SPOTLIGHTS

There are many leaders currently shaping and debating the intersection of AI and society.

Insider Insights

Takeaways that stuck with us from contributing experts.

Follow the conversations

We’ve compiled lists of leaders in the space. While we aren’t responsible for what they say, we wanted to share the people who came up in our research.

REAL TALK

Ideas for action.

A lot of the challenges around AI in society are emerging and will require collective solutions. We talked to the experts about what you can do, too.

JOIN THE CONVERSATION.

Check out platforms like All Tech is Human to participate in ongoing dialogues and learn about what is happening and what is possible in AI. Their Slack community currently has 6k members across 78 countries.

LOOK AT MULTIPLE SIDES OF ISSUES.

By actively seeking diverse perspectives, staying informed, and continuously reassessing your values, you can navigate complex AI debates with a well-informed, ethically grounded point of view. Look for resource lists on organizations’ and scholars’ websites to get started.

ATTEND LOCAL EVENTS.

There’s a lot going on in San Francisco in this space. From panels to hackathons to meet-ups to discuss the issues and policies, our city has a growing AI community with a wide range of perspectives. Follow groups like TED AI, SHACK15, and Cerebral Valley to get looped into the conversations.

ORG SPOTLIGHTS

THE ARTIST

Creative platforms producing (debatably) original artworks have been on the rise in the past year, and the ethical questions they raise remain critical and complex. We used AI to shape the visuals in this exhibit and the primary graphic for the showcase. In this section, you can see some of our team’s drafts and attempts at working with Midjourney to produce a piece of art.

LEAD ARTIST

Artificial Intelligence

THANK YOU TO OUR CONTRIBUTORS

In addition to the countless authors and creators whose work we reviewed, we wanted to thank the experts who took the time to formally share their thoughts with us. While they’re not responsible for anything we got wrong, they may be responsible for a lot of what we got right!

Astrid Willis Countee, Data Anthropologist & Technologist

David Kelley, PhD, Computational Biologist & Machine Learning Specialist

Gracie Ermi, Machine Learning Scientist at Impact Observatory

John Zimmerman, AI Professor

Theresa Harris, Program Director, AAAS

ILLUMINATIONS WAS MADE POSSIBLE BY:

COMMUNITY PARTNERS:

SUSTAINING PATRONS:

Andrew Watson

Camilla Rockefeller

David Kelley

Hillari & Michael Sasse

Wendi Zhang

CONTRIBUTORS:

Astrid Countee, Research Associate

Camille Rose, Photographer

Crystal Dilworth, PhD, Board Member

Daniel Aguirre, Community Organizer

Esther Knox-Dekoning, Web Designer & Developer

Erick Salazar, Videographer + Photographer

Lindsay Newey, Animator

Kathleen Sheffer, Photographer

Maya Bialik, EdM, Board Member

Melissa Pappas, Arts & Exhibits Collaborator

Michelle Paull, Strategic Advisor

Sam Galison, Design Engineer

Samuel Knox, Software Developer

Stacey Baker, Research Associate

VOLUNTEERS:

Alex Collins

Amanda Vera

Ana Ostrovsky

Andrew Graves

Bo Bradrich

David Kelley

Hillari Fine Sasse

Jimmy Maley

Kim Pedersen

Kim Tereco

Marques Jackson

Mauri Sanchez

Melissa Pappas

Michael Sasse

Negin Hemati

Pam Pedersen

Sandy Delgado

Spencer Kerber

Su Chon

Tracy Silver

Victoria Becker

Zoe Austin

THE PLENARY, CO. TEAM:

Stephanie Fine-Sasse, Founder + Director

Ashley Cortés Hernández, Clubhouse Director

Christine La, Community Content Director

Samar Ibrahim, Community Engagement Manager